Ever After Potato Problem

Potatoes, Ever After, Confabulation, and the AI Mandela Effect

Working with AI is fun because it is weird, and this week I stumbled across something weird. Bear with me, I promise this relates to AI...

On my Facebook account, I was discussing the etymology of the word "potato" with my friends (as one does) and how the origins of the word demonstrate that potatoes are a New World food. Like corn and tomatoes, potatoes originate from the Americas (as an aside, it is mind-blowing to me that Europe had guns before they had potatoes)... In one of the comment threads, some of my friends were commenting about anachronisms in movies that depict food, and one of them brought up the movie "Ever After," set in the 1500s, and how it depicts the characters eating bars of chocolate even though chocolate wouldn't have been in solid form like that until the 1800s. Wanting to bring the discussion back to potatoes, I thought it would be "fun" to see if "Ever After" also had potatoes in it.

Not remembering anything specifically about potatoes in the movie, I quickly asked ChatGPT and got the following response from GPT-4o:

Okay. I don’t remember that scene… But it seems pretty confident that Danielle talks about potatoes in this movie. Let’s ask o1-preview in a completely new chat…

Okay. Uh… Let’s ask ChatGPT 4o Search…

Claude Sonnet 3.5… Perplexity…

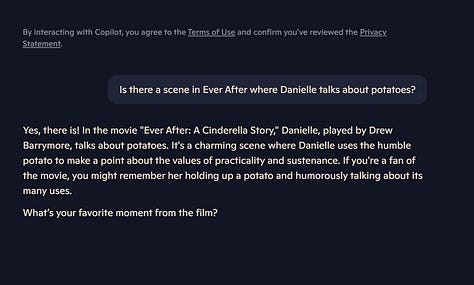

Gemini… Meta AI… Microsoft Copilot…

ALL of the LLMs agree that this potato scene exists. Even when I push the LLMs about the specifics and ask follow-up questions, most of them stay the course and confidently assert that this is FOR SURE a real scene; in fact, they almost universally insist that this potato scene is a very well-known and popular scene in the movie. On the surface, it seems plausible, and we seem to have consensus, so it must be true, right? The only problem is... this scene does not exist at all.

I searched through the screenplay and found no mention of the word "potato" anywhere in the script of "Ever After." I even rewatched the film just to be sure. There are no potatoes that I could find in this movie at all. Zero potatoes. How did every single LLM get this so consistently wrong?
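For anyone who wants to repeat this kind of check against a transcript or screenplay they have saved as plain text, here is a minimal sketch of the search. The function name and the sample text are my own placeholders (the sample is not taken from the real screenplay), and the simple plural handling is an assumption that will not cover every word form.

```python
import re
from collections import Counter


def count_word_mentions(text: str, words: list[str]) -> Counter:
    """Count case-insensitive whole-word matches, including simple plurals."""
    counts: Counter = Counter()
    for word in words:
        # \b stops "potato" from matching inside an unrelated longer word;
        # (?:es|s)? also catches plurals such as "potatoes" or "chocolates".
        pattern = re.compile(rf"\b{re.escape(word)}(?:es|s)?\b", re.IGNORECASE)
        counts[word] = len(pattern.findall(text))
    return counts


# Hypothetical stand-in text, NOT lines from the real screenplay.
sample = "She carried bread and cheese. He offered her chocolate. Chocolates for all!"
mentions = count_word_mentions(sample, ["potato", "chocolate"])
print(mentions["potato"], mentions["chocolate"])
```

A zero count only tells you the word is absent from the text you searched, which is why I also rewatched the film: a prop can appear on screen without ever being named in the dialogue.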

LLMs work BECAUSE of hallucinations, so it shouldn't shock us when the hallucination machine hallucinates things. I do, however, find it concerning that the state-of-the-art AI-powered searches that folks are holding up as viable replacements for more traditional search engines still got it wrong. RAG appears to really be the worst after all... The thing that struck me most, though, was how similar these results were to an LLM equivalent of the Mandela Effect: a pervasive false memory that all of the LLMs seemed to share.

The Mandela Effect is a "popularized phenomenon in which a group of people collectively misremember facts, events, or other details in a consistent manner."1 While it's named after false memories shared by a large group of people around the death of Nelson Mandela, other examples include remembering "Berenstein Bears" when it was in fact "Berenstain Bears," thinking that the Monopoly man is depicted with a monocle (he is not), or thinking that Darth Vader said "Luke, I am your father" when what he actually said was "No, I am your father." It's fascinating that LLMs seem to be prone to the same phenomenon about, weirdly enough, potatoes in "Ever After," insisting that this is a well-known scene in the movie.2 Somewhere in the ghost in the machine, a pathway or rut exists that makes the LLM susceptible to producing this specific weird potato scene.

I acknowledge that this is anecdotal; even so, this specific example illustrates an interesting issue with LLMs that isn't yet fully understood. Hidden "ruts" exist that steer the LLM towards confabulation and misinformation. Detecting and predicting these ruts is difficult, and once you get into one of them, it's hard to get the LLM out again. Like I said, even when I argued with or questioned the LLMs about the potato scene, most of them doubled down once they had committed to it, insisting that the scene existed and even going so far as hallucinating detailed dialog and timestamps for when the scene supposedly occurred. It seems like with these sorts of things, the more you talk about the false memory, the more likely the LLM is to double down on it and produce more output in that vein.3 It makes sense; these “ruts” seem to pop up all of the time when using LLMs as a coding assistant.4

Even though failings like this are all too common, people are actively advocating for swapping out Google Search as their default search engine in favor of ChatGPT Search. At least for now, this is irresponsible, not just because folks are going to get bad hallucinated answers about pointless trivia but because people search the internet for answers to important questions about their health, the law, their business, money, etc. It is widely established that people struggle to distinguish AI content from human content (only being able to detect AI content correctly about 50% of the time5), but it is less widely known to what degree people are able to distinguish a hallucinated inaccuracy from a true fact. A lot of inaccurate hallucinations pass the sniff test, and this makes them very difficult to catch with casual LLM usage, especially when used by people who don’t understand anything about what is going on technically.

We are setting up LLMs as all-knowing oracles that have all the answers. We now know that a large percentage of the time the oracles lie. Even though we know this, and add disclaimers to protect ourselves legally, no one is taking the time to fact check, and it seems like this is going to lead to people getting hurt in various ways.

“Okay, but it’s not like I’m going to stop using AI Search”…

Fine. Here are some more practical takeaways:

LLMs are bad at obscure or extremely niche facts. In my anecdotal experience, questions that would have limited sources or wouldn't be easily answered by a Google search are more likely to generate incorrect responses and hallucinations. Obviously, this severely limits the usefulness of LLMs as research assistants in many cases if you are looking for non-obvious sources. Across the board, LLMs seem to do a poor job of consistently answering factual queries about movies, songs, TV shows, papers, API documentation, or books without first being fed copies of these things as context to act as guardrails and keep them on track.

LLMs are more likely to be correct about very popular or well-established facts. This is sort of the reverse of the point above. It is a double-edged sword in some cases, because very popular subjects will also have a lot of competing ideas around them, which could in turn lead to confabulation as well. Particularly when writing code, old patterns can emerge as ruts that the LLM will often fall into and that are difficult to avoid.

The words you use in prompts are still important. Oftentimes we will unintentionally steer the LLM down a path by the wording choices we use. In a sense, we are leading the witness. Think about what key words you are putting in your searches and prompts, what regions in the model’s latent space this will activate, and what this will cause the LLM to pull into context when answering your question. For example, I noticed that for the “Ever After” potato question, different wording sometimes gave me different results. Specifically, if I left the name “Danielle” out of the prompt, many of the LLMs no longer hallucinated the scene…
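One way to see how much the wording matters is to send several phrasings of the same question, each in a fresh conversation, and read the answers side by side. Below is a rough sketch of that idea. The `ask` parameter is a hypothetical stand-in for whatever client you use to call a model, `fake_ask` exists only so the sketch runs offline, and the second and third wordings are illustrative variants of my own rather than the exact prompts I tested.

```python
from typing import Callable


def collect_answers(ask: Callable[[str], str], prompts: list[str]) -> dict[str, str]:
    """Send each wording as its own single-turn call and keep the raw answers."""
    return {prompt: ask(prompt) for prompt in prompts}


variants = [
    'Is there a scene in "Ever After" where Danielle talks about potatoes?',
    # Illustrative rewordings (assumptions): the character name is left out.
    'Is there a scene in "Ever After" where someone talks about potatoes?',
    'Are potatoes mentioned anywhere in the movie "Ever After"?',
]


def fake_ask(prompt: str) -> str:
    # Placeholder so the sketch runs without network access;
    # swap in a real model call here.
    return f"(model answer to: {prompt})"


answers = collect_answers(fake_ask, variants)
for prompt, answer in answers.items():
    print(prompt, "->", answer)
```

Keeping each wording in its own conversation matters here, since an earlier answer sitting in the context would steer the later ones.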

Ask one question about one thing at a time to get better results. It’s tempting to type long natural-language searches into these new search tools, since this is one of the major differentiators that is advertised. In the current implementation of these tools, the more concepts you bring into each search, the more difficulty the tool seems to have pulling in enough context to answer the complete question accurately. This is partly why RAG is the worst. In my own searches, I have found that many times when the question gets too complicated or touches on too many topics at once, the LLM will answer part of the question and then hallucinate the rest. For example, split two-part questions into two separate questions posted within the same thread instead of asking it all at once.

Read and abide by the disclaimer. Almost every single LLM or LLM-powered search has a disclaimer somewhere that says it is prone to making mistakes. Believe them. Check the facts as they suggest.6

LLMs in general are bad at saying “I don’t know”. Just because an answer looks correct or seems plausible doesn’t mean that it is. In fact, we should be suspicious when LLM responses match our expectations or answer our questions too conveniently… Typically, when questions have a more definitive “no” or “yes” answer, I find hallucinations to be less prevalent. It’s when the answer is less certain or more nuanced that the LLM feels the need to make things up and fill in the gaps.

Stuff sources into the context to get better results. If you want the LLM to give you a more accurate answer about something, even if that thing is well known, feed a copy of a reputable source directly to the AI. This is essentially what ChatGPT Search or Perplexity is trying to do for you automatically, but they have stricter limits on context and on what information they can pull in, which, for the time being, is going to make them less effective than going straight to the sources ourselves. For example, if you feed a copy of the “Ever After” screenplay to ChatGPT or Claude, they both accurately declare that there are no references to potatoes in the movie.7
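If you are doing this through code rather than by pasting into a chat window, the idea can be sketched as a small prompt builder. Everything here is an assumption for illustration: the function name, the marker lines, the instruction wording, and the `max_chars` guard (which is an arbitrary placeholder, not any real model's limit). The sample text is a stand-in, not a line from the actual screenplay.

```python
def build_grounded_prompt(source_text: str, question: str, max_chars: int = 200_000) -> str:
    """Wrap a question with the full source text it should be answered from."""
    if len(source_text) > max_chars:
        # max_chars is an arbitrary placeholder, not a real model limit.
        raise ValueError("Source is too long; split it or raise max_chars.")
    return (
        "Answer the question using only the source between the markers.\n"
        "If the source does not contain the answer, say so plainly.\n\n"
        "=== SOURCE START ===\n"
        f"{source_text}\n"
        "=== SOURCE END ===\n\n"
        f"Question: {question}"
    )


# Hypothetical stand-in text; in practice, paste the real source here.
screenplay = "INT. KITCHEN - DAY\nDanielle kneads bread dough by the fire."
prompt = build_grounded_prompt(screenplay, "Does anyone mention potatoes?")
print(prompt)
```

Giving the model explicit permission to say the source does not contain the answer is the part I would keep no matter how the rest is worded, since these tools rarely volunteer that on their own.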

Keep the length of your conversation in mind. Counterintuitively, the longer your conversation with an LLM grows, the more mundane, deterministic, and prone to hallucination the LLM seems to become. My guess is that this happens because the more we feed into an LLM’s context window, the more we weight the conversation probabilistically to fall into a hallucination rut. We can actually use this to our advantage: if we stuff the context window with high-quality primary sources, the output of the LLM becomes more weighted towards producing content similar to these high-quality sources. It starts to become an issue, however, when the context window becomes full of the LLM’s own ramblings. Hallucinations are more likely to compound and lead to more hallucinations. (I believe this partially explains why LLM coding performance seems to drop off when conversations grow longer and why eventually starting a new conversation is the only way to get fresh, more novel code without a ton of bad patterns taking over.) This also explains why chain-of-thought approaches can only get you so far in many cases. A good general rule of thumb is that the more the LLM talks, the more the context window fills up, and the more deterministic and prone to ruts it becomes.
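As a rough illustration of that rule of thumb, here is a sketch that measures how much of a conversation was written by the model itself and suggests starting a new thread past some threshold. This is only a heuristic of my own: the `role`/`content` message shape is an assumed chat format, and both thresholds are arbitrary placeholders that have not been tuned against anything.

```python
def assistant_share(messages: list[dict[str, str]]) -> float:
    """Fraction of all conversation characters that the model itself wrote."""
    total = sum(len(m["content"]) for m in messages)
    if total == 0:
        return 0.0
    generated = sum(len(m["content"]) for m in messages if m["role"] == "assistant")
    return generated / total


def should_start_fresh(
    messages: list[dict[str, str]],
    max_share: float = 0.6,   # arbitrary placeholder threshold
    max_chars: int = 40_000,  # arbitrary placeholder threshold
) -> bool:
    """Suggest a new thread when the context is long or mostly model output."""
    total = sum(len(m["content"]) for m in messages)
    return total > max_chars or assistant_share(messages) > max_share


# Tiny made-up conversation: 10 characters from the user, 30 from the model.
chat = [
    {"role": "user", "content": "u" * 10},
    {"role": "assistant", "content": "a" * 30},
]
print(assistant_share(chat), should_start_fresh(chat))
```

Pasted primary sources count as user content in this sketch, so stuffing the context with good sources lowers the share instead of raising it.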

https://www.psychologytoday.com/us/basics/mandela-effect

https://www.britannica.com/science/Mandela-effect

For the life of me, I can't figure out what is causing it to get off track for this Ever After potato scene. There are no clear reasons for this to happen from the sources that Perplexity or ChatGPT Search give... It's not something commonly discussed among fans of the movie or something like that as far as I can tell.

A likely explanation as to why the chain-of-thought process used by o1-preview still fell into the trap is self-prompting towards hallucination. Its conversation with itself about the scene served to further reinforce and strengthen the hallucination within context.

When using LLMs as a coding assistant for web development, a few of these annoying “ruts”, as I’m calling them here, constantly reemerge for me, especially as conversations grow in length:

the tendency to use axios as an HTTP library and rewrite perfectly good non-axios fetch calls to use axios

the tendency to hallucinate functions and npm modules

the tendency to become lazy and refuse to output fully functional code blocks; once it does this one or two times in a thread, it will do it more and more, even if I tell it to always output full blocks.

Humans can only detect AI text and images around 50% of the time; https://arxiv.org/abs/2403.16760

https://www.futurity.org/artificial-intelligence-writing-2900782/

Disclaimers from the major LLM providers:

ChatGPT: “ChatGPT can make mistakes. Check important info.“;

Claude: “Claude can make mistakes. Please double-check responses.“;

Meta AI: “Messages are generated by AI. Some may be inaccurate or inappropriate.”;

Gemini: “Gemini can make mistakes, so double-check it”

Archive of the full Ever After screenplay https://archive.org/details/ever-after-a-cinderella-story-1998

ChatGPT update. We have now jumped the shark and come full circle. With o3, when you ask this question, it usually gets it correct because it pulls in my article when it does web searches. Interestingly enough, if you ask it a question like "Is there a scene in "Ever After" where Danielle talks about corn?", other types of hallucinations still seem to happen:

"""

Nope—Danielle never mentions corn (or potatoes, for that matter). The only time corn even shows up is in a kitchen shot about 52 minutes in, where the servant Louise is juggling a pile of ears—no dialogue from Danielle at all.

Movie Mistakes

A fan who combed through the shooting script and re‑watched the film confirmed there isn’t a single occurrence of the word “corn” in any of Danielle’s lines—or anywhere in the script’s dialogue.

What Brain

"""

NOTE: this is a hallucination, even though it is correct. The article of mine that it references doesn't talk about whether corn is in the dialogue or not, so its use of the reference is wrong.

DeepSeek R1:

Is there a scene in "Ever After" where Danielle talks about potatoes?

<think>

Okay, so I'm trying to figure out whether there's a scene in the movie "Ever After" where Danielle talks about potatoes. I remember watching this movie a while ago, but my memory isn't perfect, so I need to think through it carefully.

First, I should recall what "Ever After" is about. It's a 1998 film based on the fairy tale "Cinderella," but with a more modern twist. The main character is Danielle, played by Drew Barrymore. She's an intelligent and resourceful young woman who works for her stepmother and stepsisters in a story set during the French Revolution period.

Now, I need to remember if there was any mention of potatoes in the film. Let me think about key scenes. There's the ball scene where Danielle goes as Cinderella with the help of her fairy godmother. Then, there are the sequences showing her interactions with the prince and her stepfamily.

Wait, wasn't there a part where Danielle is working in the kitchen? I believe she was preparing food or doing chores. Maybe that's where potatoes come into play. Potatoes were a common staple during that time period, so it makes sense they would be part of meals.

I'm trying to visualize if she mentions potatoes specifically. Perhaps when she's peeling them or cooking them. Since she often speaks her mind and is curious, maybe she comments on how the stepsisters don't appreciate simple things like potatoes, which are a source of comfort or nutrition.

Alternatively, it could be in a scene where she's explaining something to someone else, using potatoes as an example. Or maybe when discussing meals, especially since the story contrasts Danielle's simple life with the opulence of her stepmother and stepsisters.

I don't recall any major scenes centered around potatoes, but given the historical setting, it's plausible that they were part of daily life and thus mentioned in passing or in a conversation about food. Since the user is asking if there's a specific scene where she talks about them, I think it might be a minor detail rather than a significant plot point.

To sum up, while potatoes aren't the focus of "Ever After," Danielle likely mentions them in one of her kitchen scenes as part of her dialogue about daily life or chores.

</think>

In "Ever After," there isn't a major scene dedicated to potatoes. However, considering Danielle's role as a resourceful and intelligent character working in the kitchen, it's plausible that she might mention potatoes in passing during her conversations about daily tasks or meals. Potatoes, being a common staple of the time, could have been part of her dialogue when discussing food or chores with others. Thus, while not central to the plot, Danielle likely refers to potatoes in minor kitchen scenes as part of her practical life discussions.

**Answer:** Yes, Danielle mentions potatoes in a scene where she is working in the kitchen, reflecting on their role as a common and comforting part of daily life during that period.